WMR input and interaction concepts

All Windows Mixed Reality devices use specific forms of interaction to take input from the user. Some of these forms of input are specific to certain Windows Mixed Reality devices, such as HoloLens or immersive headsets.

Immersive headsets use a variety of inputs, including spatial controllers. HoloLens is limited to three forms of input (gaze, voice, and gesture) unless the application requires extra hardware in addition to the headset.

All forms of input work with Windows Mixed Reality immersive headsets. Gaze works in VR, gestures trigger when you use the Select button on the controller, and voice is available when the end user has a microphone connected to their PC.

Input on HoloLens is different from other platforms because the primary means of interaction are:

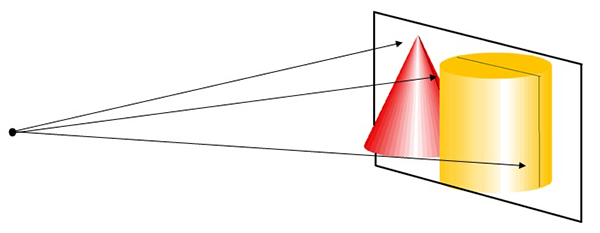

- Gaze: Controls the application by tracking where the user is looking in the world.

- Voice: Short, spoken commands, and longer free-form dictation, which can be used to issue commands or trigger actions.

- Gesture: Hand signals or controller input trigger commands in the system.

Gaze (all devices)

Gaze is an input mechanism that tracks where a user is looking:

On HoloLens, gaze is accurate enough to let users select GameObjects in the world. You can also use it to direct commands at specific GameObjects rather than at every GameObject in the Scene.
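As an illustration of gaze targeting, the following is a minimal sketch that casts a ray from the head pose each frame and records the GameObject it hits. It assumes the main Camera follows the user's head (as it does under Unity's XR support) and that selectable GameObjects have Colliders. The class name `GazeSelector`, the `maxGazeDistance` field, and the `FocusedObject` property are hypothetical names chosen for this example.

```csharp
using UnityEngine;

// Hypothetical helper: tracks which GameObject the user is currently looking at.
public class GazeSelector : MonoBehaviour
{
    // Assumed maximum gaze range in meters; tune for your application.
    public float maxGazeDistance = 10f;

    // The GameObject under the user's gaze, or null if nothing is hit.
    public GameObject FocusedObject { get; private set; }

    void Update()
    {
        Camera cam = Camera.main;
        if (cam == null)
        {
            FocusedObject = null;
            return;
        }

        // The head position and forward direction define the gaze ray.
        Transform head = cam.transform;
        RaycastHit hit;
        if (Physics.Raycast(head.position, head.forward, out hit, maxGazeDistance))
        {
            FocusedObject = hit.collider.gameObject;
        }
        else
        {
            FocusedObject = null;
        }
    }
}
```

A voice or gesture handler could then read `FocusedObject` to direct a command at only the GameObject the user is looking at.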

For more information, see Microsoft’s documentation on Gaze indicator and Gaze targeting.

Voice (all devices)

The Windows 10 API provides voice input on both HoloLens and immersive devices. Unity supports three styles of voice input:

- Keywords: Simple commands or phrases (set up in code) used to generate events. This allows you to quickly add voice commands to an application, as long as you do not require localization. The KeywordRecognizer API provides this functionality.

- Grammars: A table of commands with semantic meaning. You can configure grammars through an XML grammar file (.grxml), and localize the table if you need to. For more information on this file format, see Microsoft’s documentation on Creating Grammar Files. The GrammarRecognizer API provides this functionality.

- Dictation: A more free-form speech-to-text system that translates longer spoken input into text. To prolong battery life, dictation recognition on the HoloLens is only active for short periods of time. It requires a working Internet connection. The DictationRecognizer API provides this functionality.
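The keyword style is the quickest of the three to set up. The following is a minimal sketch using `KeywordRecognizer` from the `UnityEngine.Windows.Speech` namespace. The class name `VoiceCommands` and the keywords "reset" and "pause" are hypothetical, and the actions only write to the log.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using UnityEngine;
using UnityEngine.Windows.Speech;

// Hypothetical example: maps spoken keywords to actions.
public class VoiceCommands : MonoBehaviour
{
    private KeywordRecognizer recognizer;
    private readonly Dictionary<string, Action> commands =
        new Dictionary<string, Action>();

    void Start()
    {
        // Example keywords only; replace with your application's commands.
        commands.Add("reset", () => Debug.Log("Reset requested"));
        commands.Add("pause", () => Debug.Log("Pause requested"));

        recognizer = new KeywordRecognizer(commands.Keys.ToArray());
        recognizer.OnPhraseRecognized += OnPhraseRecognized;
        recognizer.Start();
    }

    private void OnPhraseRecognized(PhraseRecognizedEventArgs args)
    {
        // args.text holds the keyword that was recognized.
        Action action;
        if (commands.TryGetValue(args.text, out action))
        {
            action();
        }
    }

    void OnDestroy()
    {
        if (recognizer == null)
        {
            return;
        }

        recognizer.OnPhraseRecognized -= OnPhraseRecognized;
        if (recognizer.IsRunning)
        {
            recognizer.Stop();
        }
        recognizer.Dispose();
    }
}
```

Keeping the keywords in a dictionary means a new command only needs one extra entry. Because the phrases are hard-coded strings, this approach carries the localization limitation described above.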

HoloLens headsets have a built-in microphone, allowing voice input without extra hardware. For an application to use voice input with immersive headsets, users need to have an external microphone connected to their PC.

For more information about voice input, refer to Microsoft’s documentation on Voice design.

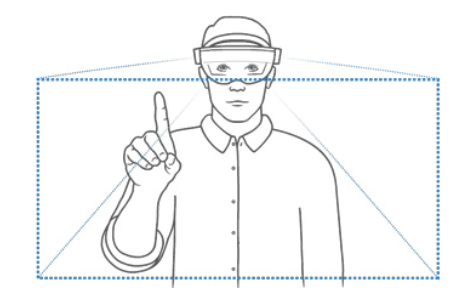

Gestures (all devices)

A gesture is a hand signal interpreted by the system or a controller signal from a spatial controller. Both HoloLens and immersive devices support gestures. Immersive devices require a spatial controller to initiate gestures, while HoloLens gestures require hand movement. You can use gestures to trigger specific commands in your application.

Windows Mixed Reality provides several built-in gestures for use in your application, as well as a generic API to recognize custom gestures. Both built-in gestures and custom gestures (added via API) are functional in Unity.
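As one example of a built-in gesture, the following minimal sketch listens for the tap gesture using `GestureRecognizer` from the `UnityEngine.XR.WSA.Input` namespace. It assumes the event-based API introduced with the 2017.3 XR changes that this page covers. The class name `TapHandler` is hypothetical, and the handler only logs the event.

```csharp
using UnityEngine;
using UnityEngine.XR.WSA.Input;

// Hypothetical example: logs tap gestures from hands or controllers.
public class TapHandler : MonoBehaviour
{
    private GestureRecognizer recognizer;

    void Start()
    {
        recognizer = new GestureRecognizer();

        // Only listen for the tap gesture in this sketch.
        recognizer.SetRecognizableGestures(GestureSettings.Tap);
        recognizer.Tapped += OnTapped;
        recognizer.StartCapturingGestures();
    }

    private void OnTapped(TappedEventArgs args)
    {
        // args.source.kind reports whether a hand or a controller
        // produced the tap.
        Debug.Log("Tap from " + args.source.kind + ", tap count " + args.tapCount);
    }

    void OnDestroy()
    {
        if (recognizer == null)
        {
            return;
        }

        recognizer.Tapped -= OnTapped;
        recognizer.StopCapturingGestures();
        recognizer.Dispose();
    }
}
```

The same handler covers both device types: on HoloLens the tap comes from a hand movement, and on immersive headsets it comes from the Select button on a spatial controller.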

For more information, see Microsoft’s documentation on gestures.

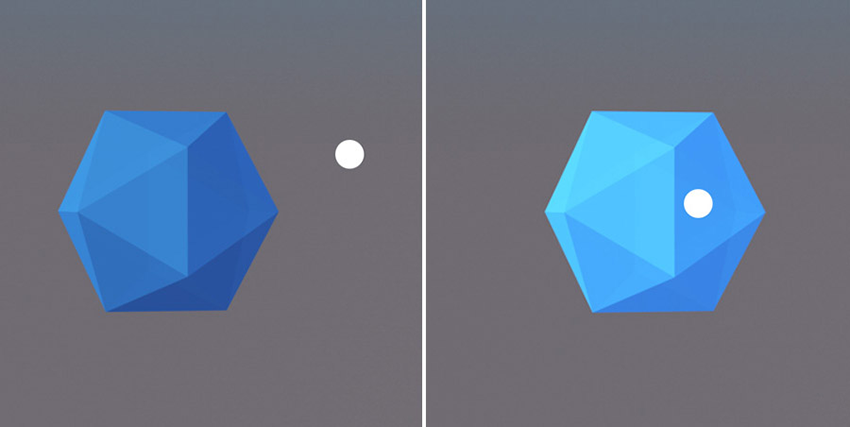

Focus Point (all devices)

Windows Mixed Reality uses a process called Late Stage Reprojection (LSR) to compensate for movement of the user’s head between the time a frame is rendered and when it appears on the display. To compensate for this latency, LSR modifies the rendered image according to the most recent head tracking data, then presents the image on the display.

The HoloLens uses a stabilization plane for reprojection (see Microsoft documentation on plane-based reprojection). This is a plane in space which represents an area where the user is likely to be focusing. This stabilization plane has default values, but applications can also use the SetFocusPoint API to explicitly set the position, normal, and velocity of this plane. Objects appear most stable at their intersection with this plane. Immersive headsets also support this method, but for these devices there is a more efficient form of reprojection available called per-pixel depth reprojection.
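A minimal sketch of setting the stabilization plane explicitly is shown here. It assumes the SetFocusPoint API referred to above corresponds to `HolographicSettings.SetFocusPointForFrame` in the `UnityEngine.XR.WSA` namespace. The class name `FocusPointSetter` and the `focusTarget` field are hypothetical, and pointing the plane normal back at the camera is one reasonable choice rather than a requirement.

```csharp
using UnityEngine;
using UnityEngine.XR.WSA;

// Hypothetical example: keeps the stabilization plane on a chosen object.
public class FocusPointSetter : MonoBehaviour
{
    // Assumed to be the GameObject the user is most likely focusing on.
    public Transform focusTarget;

    void Update()
    {
        Camera cam = Camera.main;
        if (focusTarget == null || cam == null)
        {
            return;
        }

        // Place the plane at the target, facing back toward the user.
        Vector3 normal = -cam.transform.forward;

        // The focus point applies to the current frame only,
        // so it is set again every frame.
        HolographicSettings.SetFocusPointForFrame(focusTarget.position, normal);
    }
}
```

A script like this is only appropriate for plane-based reprojection. It should not be active in applications that rely on per-pixel depth reprojection.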

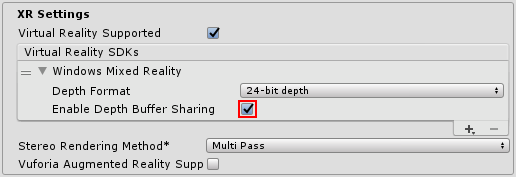

Desktop applications using immersive headsets can enable per-pixel depth reprojection, which offers higher quality without requiring explicit work by the application. To allow per-pixel depth reprojection, open the Player Settings for Windows Mixed Reality, go to XR Settings > Virtual Reality SDKs, and check Enable Depth Buffer Sharing.

When Enable Depth Buffer Sharing is enabled, ensure applications do not explicitly call the SetFocusPoint method, which overrides per-pixel depth reprojection with plane-based reprojection.

Enable Depth Buffer Sharing on HoloLens has no benefit unless you have updated the device’s OS to Windows 10 Redstone 4 (RS4). If the device is running RS4, then it determines the stabilization plane automatically from the range of values found in the depth buffer. Reprojection still happens using a stabilization plane, but the application does not need to explicitly call SetFocusPoint.

For more information on how the HoloLens achieves stable holograms, see Microsoft’s documentation on hologram stability.

Anchors (HoloLens only)

Anchor components are a way for the virtual world to interact with the real world. An anchor is a special component that overrides the position and orientation of the Transform component of the GameObject it is attached to.

WorldAnchors

A WorldAnchor represents a link between the device’s understanding of an exact point in the physical world and the GameObject containing the WorldAnchor component. Once added, a GameObject with a WorldAnchor component remains locked in place to a location in the real world, but may shift in Unity coordinates from frame to frame as the device’s understanding of the anchor’s position becomes more precise.

Some common uses for WorldAnchors include:

- Locking a holographic game board to the top of a table.

- Locking a video window to a wall.

Use WorldAnchors whenever there is a GameObject or group of GameObjects that you want to fix to a physical location.

WorldAnchors override their parent GameObject’s Transform component, so any direct manipulations of the Transform component are lost. Similarly, GameObjects with WorldAnchor components should not contain Rigidbody components with dynamic physics. These components can be more resource-intensive and game performance will decrease the further apart the WorldAnchors are.

Note: Only use a small number of WorldAnchors to minimise performance issues. For example, a game board surface placed upon a table only requires a single anchor for the board. In this case, child GameObjects of the board do not require their own WorldAnchor.
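The single-anchor game board case can be sketched as follows, using the `WorldAnchor` component from the `UnityEngine.XR.WSA` namespace. The class name `AnchoredBoard` and the `Place` method are hypothetical. Because an anchor overrides the Transform, the sketch removes any existing anchor before moving the board and adds a new one afterwards.

```csharp
using UnityEngine;
using UnityEngine.XR.WSA;

// Hypothetical example: one anchor for a board; children need no anchor.
public class AnchoredBoard : MonoBehaviour
{
    // Moves the board to a new pose and locks it to the real world there.
    public void Place(Vector3 position, Quaternion rotation)
    {
        // An existing anchor would override the Transform change below,
        // so remove it first.
        WorldAnchor existing = GetComponent<WorldAnchor>();
        if (existing != null)
        {
            DestroyImmediate(existing);
        }

        transform.SetPositionAndRotation(position, rotation);

        // Re-anchor at the new pose.
        WorldAnchor anchor = gameObject.AddComponent<WorldAnchor>();
        anchor.OnTrackingChanged += OnTrackingChanged;

        if (!anchor.isLocated)
        {
            Debug.Log("Anchor added but not yet located by the device.");
        }
    }

    private void OnTrackingChanged(WorldAnchor self, bool located)
    {
        // located is false while the device cannot find the anchor.
        Debug.Log("Board anchor located: " + located);
    }
}
```

Child GameObjects of the board move with it and do not need their own WorldAnchor, which keeps the number of anchors low.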

For more information and best practices, see Microsoft’s documentation on spatial anchors.

2018–03–27 Page published

New content added for XR API changes in 2017.3