Camera

Cameras are the devices that capture and display the world to the player. By customizing and manipulating cameras, you can make the presentation of your game truly unique. You can have an unlimited number of cameras in a scene. They can be set to render in any order, at any place on the screen, or only certain parts of the screen.

Properties

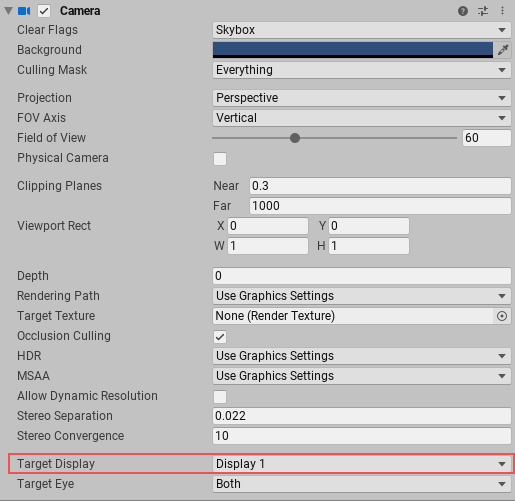

Unity displays different properties in the Camera Inspector depending on the render pipeline that your Project uses.

- If your Project uses the Universal Render Pipeline (URP), see the URP package documentation microsite.

- If your Project uses the High Definition Render Pipeline (HDRP), see the HDRP package documentation microsite.

- If your Project uses the Built-in Render Pipeline, Unity displays the following properties:

| Property: | Function: |
|---|---|
| Clear Flags | Determines which parts of the screen will be cleared. This is handy when using multiple Cameras to draw different game elements. |
| Background | The color applied to the remaining screen after all elements in view have been drawn and there is no skybox. |
| Culling Mask | Includes or omits layers of objects to be rendered by the Camera. Assign layers to your objects in the Inspector. |
| Projection | Toggles the camera’s capability to simulate perspective. |
| Perspective | Camera will render objects with perspective intact. |
| Orthographic | Camera will render objects uniformly, with no sense of perspective. NOTE: Deferred rendering is not supported in Orthographic mode. Forward rendering is always used. |
| Size (when Orthographic is selected) | The viewport size of the Camera when set to Orthographic. |
| FOV Axis (when Perspective is selected) | Field of view axis. |
| Horizontal | The Camera uses a horizontal field of view axis. |
| Vertical | The Camera uses a vertical field of view axis. |
| Field of view (when Perspective is selected) | The Camera’s view angle, measured in degrees along the axis specified in the FOV Axis drop-down. |
| Physical Camera | Tick this box to enable the Physical Camera properties for this camera. When the Physical Camera properties are enabled, Unity calculates the Field of View using the properties that simulate real-world camera attributes: Focal Length, Sensor Size, and Lens Shift. Physical Camera properties are not visible in the Inspector until you tick this box. |
| Focal Length | Set the distance, in millimeters, between the camera sensor and the camera lens. Lower values result in a wider Field of View, and vice versa. When you change this value, Unity automatically updates the Field of View property accordingly. |
| Sensor Type | Specify the real-world camera format you want the camera to simulate. Choose the desired format from the list. When you choose a camera format, Unity sets the Sensor Size > X and Y properties to the correct values automatically. If you change the Sensor Size values manually, Unity automatically sets this property to Custom. |
| Sensor Size | Set the size, in millimeters, of the camera sensor. Unity sets the X and Y values automatically when you choose the Sensor Type. You can enter custom values if needed. |
| X | The width of the sensor. |
| Y | The height of the sensor. |
| Lens Shift | Shift the lens horizontally or vertically from center. Values are multiples of the sensor size; for example, a shift of 0.5 along the X axis offsets the sensor by half its horizontal size. You can use lens shifts to correct distortion that occurs when the camera is at an angle to the subject (for example, converging parallel lines). Shift the lens along either axis to make the camera frustum oblique. |
| X | The horizontal sensor offset. |
| Y | The vertical sensor offset. |
| Gate Fit | Options for changing the size of the resolution gate (size/aspect ratio of the game view) relative to the film gate (size/aspect ratio of the Physical Camera sensor). For further information about resolution gate and film gate, see documentation on Physical Cameras. |
| Vertical | Fits the resolution gate to the height of the film gate. If the sensor aspect ratio is larger than the game view aspect ratio, Unity crops the rendered image at the sides. If the sensor aspect ratio is smaller than the game view aspect ratio, Unity overscans the rendered image at the sides. When you choose this setting, changing the sensor width (Sensor Size > X property) has no effect on the rendered image. |
| Horizontal | Fits the resolution gate to the width of the film gate. If the sensor aspect ratio is larger than the game view aspect ratio, Unity overscans the rendered image on the top and bottom. If the sensor aspect ratio is smaller than the game view aspect ratio, Unity crops the rendered image on the top and bottom. When you choose this setting, changing the sensor height (Sensor Size > Y property) has no effect on the rendered image. |
| Fill | Fits the resolution gate to either the width or height of the film gate, whichever is smaller. This crops the rendered image. |
| Overscan | Fits the resolution gate to either the width or height of the film gate, whichever is larger. This overscans the rendered image. |
| None | Ignores the resolution gate and uses the film gate only. This stretches the rendered image to fit the game view aspect ratio. |
| Clipping Planes | Distances from the camera to start and stop rendering. |
| Near | The closest point relative to the camera that drawing will occur. |
| Far | The furthest point relative to the camera that drawing will occur. |
| Viewport Rect | Four values that indicate where on the screen this camera view will be drawn. Measured in Viewport Coordinates (values 0–1). |
| X | The beginning horizontal position that the camera view will be drawn. |
| Y | The beginning vertical position that the camera view will be drawn. |
| W (Width) | Width of the camera output on the screen. |
| H (Height) | Height of the camera output on the screen. |
| Depth | The camera’s position in the draw order. Cameras with a larger value will be drawn on top of cameras with a smaller value. |
| Rendering Path | Options for defining what rendering methods will be used by the camera. |
| Use Player Settings | This camera will use whichever Rendering Path is set in the Player Settings. |
| Vertex Lit | All objects rendered by this camera will be rendered as Vertex-Lit objects. |
| Forward | All objects will be rendered with one pass per material. |
| Deferred Lighting | All objects will be drawn once without lighting, then lighting of all objects will be rendered together at the end of the render queue. NOTE: If the camera’s projection mode is set to Orthographic, this value is overridden, and the camera will always use Forward rendering. |
| Target Texture | Reference to a Render Texture that will contain the output of the Camera view. Setting this reference will disable this Camera’s capability to render to the screen. |
| Occlusion Culling | Enables Occlusion Culling for this camera. Occlusion Culling means that objects that are hidden behind other objects are not rendered, for example if they are behind walls. See Occlusion Culling for details. |
| Allow HDR | Enables High Dynamic Range rendering for this camera. See High Dynamic Range Rendering for details. |
| Allow MSAA | Enables multi sample antialiasing for this camera. |
| Allow Dynamic Resolution | Enables Dynamic Resolution rendering for this camera. See Dynamic Resolution for details. |
| Target Display | Defines which external device to render to. Between 1 and 8. |
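
The link between Focal Length, Sensor Size, and Field of View described in the table can be illustrated with the standard pinhole-camera relation. The sketch below is standalone Python, not Unity API code. It assumes that the field of view along an axis depends only on the focal length and the sensor size along that axis, and it ignores Lens Shift and Gate Fit. The function names and the 24 mm sensor height are illustrative choices, not values taken from this page.

```python
import math


def focal_length_to_fov(focal_length_mm: float, sensor_size_mm: float) -> float:
    """Return the field of view, in degrees, along the axis that sensor_size_mm measures.

    Assumes an ideal pinhole model: fov = 2 * atan(sensor / (2 * focal length)).
    """
    return math.degrees(2.0 * math.atan(sensor_size_mm / (2.0 * focal_length_mm)))


def fov_to_focal_length(fov_degrees: float, sensor_size_mm: float) -> float:
    """Inverse of focal_length_to_fov under the same pinhole assumption."""
    return sensor_size_mm / (2.0 * math.tan(math.radians(fov_degrees) / 2.0))


# Illustrative values: a 24 mm tall sensor with two different focal lengths.
for focal_length in (20.0, 50.0):
    fov = focal_length_to_fov(focal_length, 24.0)
    print(f"focal length {focal_length} mm -> vertical FOV {fov:.2f} degrees")
```

Under this model a lower focal length gives a wider field of view, which matches the behavior the Focal Length row describes.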

Details

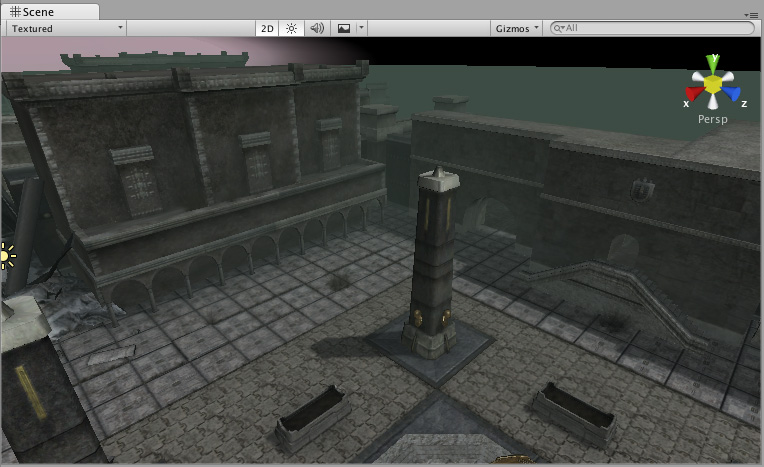

Cameras are essential for displaying your game to the player. They can be customized, scripted, or parented to achieve just about any kind of effect imaginable. For a puzzle game, you might keep the Camera static for a full view of the puzzle. For a first-person shooter, you would parent the Camera to the player character, and place it at the character’s eye level. For a racing game, you’d probably have the Camera follow your player’s vehicle.

You can create multiple Cameras and assign each one to a different Depth. Cameras are drawn from low Depth to high Depth. In other words, a Camera with a Depth of 2 will be drawn on top of a Camera with a Depth of 1. You can adjust the values of the Normalized Viewport Rectangle (the Viewport Rect property) to resize and position the Camera’s view onscreen. This can create multiple mini-views like missile cams, map views, rear-view mirrors, etc.
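
The draw order described above amounts to sorting Cameras by Depth, lowest first. The following is a minimal standalone Python sketch of that ordering only. The `CameraInfo` class and the camera names are hypothetical and are not part of Unity.

```python
from dataclasses import dataclass


@dataclass
class CameraInfo:
    """Hypothetical stand-in for a Camera: just a name and its Depth value."""
    name: str
    depth: float


def draw_order(cameras):
    """Return camera names from first drawn (lowest Depth) to last drawn (highest Depth)."""
    return [camera.name for camera in sorted(cameras, key=lambda camera: camera.depth)]


cameras = [
    CameraInfo("Rear-view mirror", 2),
    CameraInfo("Main", 0),
    CameraInfo("Minimap", 1),
]
# The last name in the list is the camera drawn on top of the others.
print(draw_order(cameras))
```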

Render path

Unity supports different rendering paths. You should choose which one you use depending on your game content and target platform / hardware. Different rendering paths have different features and performance characteristics that mostly affect lights and shadows. The rendering path used by your Project is chosen in the Player settings. Additionally, you can override it for each Camera.

For more information on rendering paths, check the rendering paths page.

Clear Flags

Each Camera stores color and depth information when it renders its view. The portions of the screen that are not drawn in are empty, and will display the skybox by default. When you are using multiple Cameras, each one stores its own color and depth information in buffers, accumulating more data as each Camera renders. As any particular Camera in your scene renders its view, you can set the Clear Flags to clear different collections of the buffer information. To do this, choose one of the following four options:

Skybox

This is the default setting. Any empty portions of the screen will display the current Camera’s skybox. If the current Camera has no skybox set, it will default to the skybox chosen in the Lighting Window (menu: Window > Rendering > Lighting). If no skybox is set there either, it will fall back to the Background Color. Alternatively, a Skybox component can be added to the camera. If you want to create a new Skybox, you can use this guide.

Solid color

Any empty portions of the screen will display the current Camera’s Background Color.

Depth only

If you want to draw a player’s gun without letting it get clipped inside the environment, set one Camera at Depth 0 to draw the environment, and another Camera at Depth 1 to draw the weapon alone. Set the weapon Camera’s Clear Flags to depth only. This will keep the graphical display of the environment on the screen, but discard all information about where each object exists in 3-D space. When the gun is drawn, the opaque parts will completely cover anything drawn, regardless of how close the gun is to the wall.

Don’t clear

This mode does not clear either the color or the depth buffer. The result is that each frame is drawn over the previous one, resulting in a smeared effect. This isn’t typically used in games, and would more likely be used with a custom shader.

Note that on some GPUs (mostly mobile GPUs), not clearing the screen might result in the contents of it being undefined in the next frame. On some systems, the screen may contain the previous frame image, a solid black screen, or random colored pixels.
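
The four options can be summarized by which buffers a Camera clears before it renders. The standalone Python sketch below encodes one reading of the descriptions above: Skybox and Solid color replace both color and depth, Depth only keeps the color already on screen, and Don’t clear keeps both. The enum and function names are hypothetical and do not mirror Unity’s scripting API.

```python
from enum import Enum


class ClearFlags(Enum):
    """Hypothetical labels for the four Clear Flags options on this page."""
    SKYBOX = "Skybox"
    SOLID_COLOR = "Solid color"
    DEPTH_ONLY = "Depth only"
    DONT_CLEAR = "Don't clear"


def buffers_cleared(flags: ClearFlags) -> tuple:
    """Return (clears_color, clears_depth) for the given option."""
    if flags in (ClearFlags.SKYBOX, ClearFlags.SOLID_COLOR):
        # Empty areas are filled with the skybox or the Background Color.
        return (True, True)
    if flags is ClearFlags.DEPTH_ONLY:
        # Earlier cameras' colors stay on screen; only depth is discarded.
        return (False, True)
    # Don't clear: both buffers keep whatever was drawn before.
    return (False, False)


for option in ClearFlags:
    clears_color, clears_depth = buffers_cleared(option)
    print(f"{option.value}: color cleared={clears_color}, depth cleared={clears_depth}")
```

In the weapon example, the environment Camera would use Skybox or Solid color and the weapon Camera would use Depth only, so the gun is depth-tested only against itself.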

Clip Planes

The Near and Far Clip Plane properties determine where the Camera’s view begins and ends. The planes are laid out perpendicular to the Camera’s direction and are measured from its position. The Near plane is the closest location that will be rendered, and the Far plane is the furthest.

The clipping planes also determine how depth buffer precision is distributed over the scene. In general, to get better precision you should move the Near plane as far from the Camera as possible.
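
To see why the Near plane matters so much, consider a conventional perspective depth mapping in which the stored depth runs from 0 at the Near plane to 1 at the Far plane. The standalone Python sketch below assumes that mapping. The exact mapping Unity uses varies by platform and graphics API, so treat the numbers as illustrative only. The function name and the distances are hypothetical.

```python
def depth_value(z: float, near: float, far: float) -> float:
    """Map a view distance z in [near, far] to a depth value in [0, 1].

    Assumes the conventional non-linear perspective mapping
    d = far * (z - near) / (z * (far - near)).
    """
    return (far * (z - near)) / (z * (far - near))


far = 1000.0
for near in (0.01, 0.1, 1.0):
    # Share of the whole depth range consumed by the first 10 units of distance.
    share = depth_value(10.0, near, far)
    print(f"near={near}: first 10 units use {share:.1%} of the depth range")
```

Under this assumption, a very small Near value spends almost the entire depth range on geometry close to the Camera, leaving little precision for distant objects.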

Note that the near and far clip planes together with the planes defined by the field of view of the camera describe what is popularly known as the camera frustum. Unity ensures that when rendering your objects those which are completely outside of this frustum are not displayed. This is called Frustum Culling. Frustum Culling happens irrespective of whether you use Occlusion Culling in your game.

For performance reasons, you might want to cull small objects earlier. For example, small rocks and debris could be made invisible at a much smaller distance than large buildings. To do that, put small objects into a separate layer and set up per-layer cull distances using the Camera.layerCullDistances scripting property.
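
The idea behind per-layer cull distances can be sketched as a lookup table with one distance per layer. The standalone Python sketch below is not Unity code. It assumes 32 layers and that a value of 0 means "use the Far plane distance", and it treats culling as a simple distance comparison. The layer index and distances are hypothetical.

```python
LAYER_COUNT = 32  # assumed number of layers


def effective_cull_distance(layer: int, layer_cull_distances, far_clip: float) -> float:
    """Return the distance beyond which objects on this layer are culled."""
    distance = layer_cull_distances[layer]
    # Assumption: 0 means "no per-layer override, use the Far plane".
    if distance == 0:
        return far_clip
    # An object past the Far plane is never drawn, so cap at the Far plane.
    return min(distance, far_clip)


def is_within_cull_distance(layer: int, object_distance: float,
                            layer_cull_distances, far_clip: float) -> bool:
    """Simplified check: True if the object is close enough to be drawn."""
    return object_distance <= effective_cull_distance(layer, layer_cull_distances, far_clip)


SMALL_PROPS_LAYER = 10  # hypothetical layer holding rocks and debris
distances = [0.0] * LAYER_COUNT
distances[SMALL_PROPS_LAYER] = 15.0

print(is_within_cull_distance(SMALL_PROPS_LAYER, 20.0, distances, 1000.0))  # small rock at 20 units
print(is_within_cull_distance(0, 20.0, distances, 1000.0))                  # building on layer 0 at 20 units
```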

Culling Mask

The Culling Mask is used for selectively rendering groups of objects using Layers. More information on using layers can be found here.

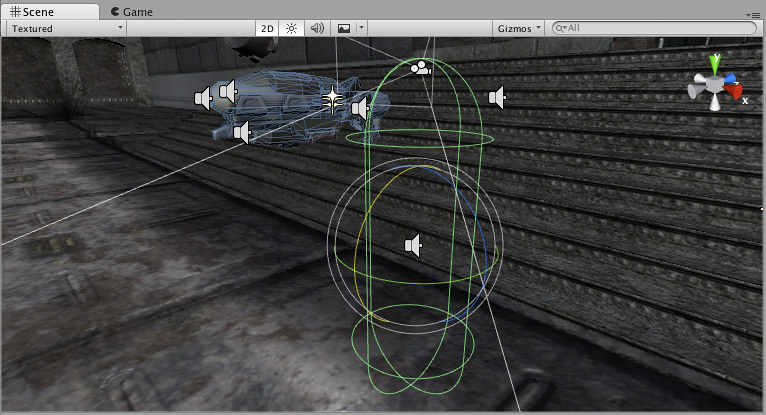
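
A culling mask of this kind is commonly represented as a bitmask with one bit per layer. The standalone Python sketch below shows that representation under the assumption that layer `n` corresponds to bit `1 << n`. The layer numbers and helper names are hypothetical, and the code does not call Unity.

```python
def mask_from_layers(layers) -> int:
    """Build a bitmask with one bit set for each layer index in layers."""
    mask = 0
    for layer in layers:
        mask |= 1 << layer
    return mask


def is_layer_rendered(culling_mask: int, layer: int) -> bool:
    """Return True if the bit for this layer is set in the mask."""
    return (culling_mask & (1 << layer)) != 0


DEFAULT_LAYER = 0   # hypothetical layer indices for illustration
MINIMAP_LAYER = 8
HIDDEN_LAYER = 9

# A camera that renders the default layer and the minimap icons, but nothing else.
culling_mask = mask_from_layers([DEFAULT_LAYER, MINIMAP_LAYER])

print(is_layer_rendered(culling_mask, MINIMAP_LAYER))
print(is_layer_rendered(culling_mask, HIDDEN_LAYER))
```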

Normalized Viewport Rectangles

Normalized Viewport Rectangle is specifically for defining a certain portion of the screen that the current camera view will be drawn upon. You can put a map view in the lower-right hand corner of the screen, or a missile-tip view in the upper-left corner. With a bit of design work, you can use Viewport Rectangle to create some unique behaviors.

It’s easy to create a two-player split screen effect using Normalized Viewport Rectangle. After you have created your two cameras, change both cameras’ H values to 0.5, then set player one’s Y value to 0.5 and player two’s Y value to 0. This will make player one’s camera display from halfway up the screen to the top, and player two’s camera start at the bottom and stop halfway up the screen.
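
The split-screen setup above can be checked by converting the normalized values to pixels. The standalone Python sketch below assumes that X and Y are measured from the bottom-left corner of the screen, which is consistent with the description of player one occupying the top half. The function name and the 1920x1080 screen size are illustrative.

```python
def viewport_to_pixels(x: float, y: float, w: float, h: float,
                       screen_width: int, screen_height: int) -> tuple:
    """Convert a normalized viewport rectangle (values 0 to 1) to a pixel rectangle.

    Returns (left, bottom, width, height), with the origin assumed at the bottom-left.
    """
    return (
        round(x * screen_width),
        round(y * screen_height),
        round(w * screen_width),
        round(h * screen_height),
    )


# Two-player split screen on a 1920x1080 display.
player_one = viewport_to_pixels(0.0, 0.5, 1.0, 0.5, 1920, 1080)  # (0, 540, 1920, 540): top half
player_two = viewport_to_pixels(0.0, 0.0, 1.0, 0.5, 1920, 1080)  # (0, 0, 1920, 540): bottom half
print(player_one, player_two)
```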

Orthographic

Marking a Camera as Orthographic removes all perspective from the Camera’s view. This is mostly useful for making isometric or 2D games.

Note that fog is rendered uniformly in orthographic camera mode and may therefore not appear as expected. This is because the Z coordinate of the post-perspective space is used for the fog “depth”. This is not strictly accurate for an orthographic camera but it is used for its performance benefits during rendering.
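
The difference between the two projection modes can be shown by computing how much of the screen height an object covers. The standalone Python sketch below makes two assumptions: the perspective case uses a vertical field of view, and the orthographic Size is taken to be half the vertical extent of the view in world units. The function names and values are hypothetical.

```python
import math


def perspective_screen_fraction(object_height: float, distance: float,
                                vertical_fov_degrees: float) -> float:
    """Fraction of the screen height an object covers under perspective projection."""
    visible_height = 2.0 * distance * math.tan(math.radians(vertical_fov_degrees) / 2.0)
    return object_height / visible_height


def orthographic_screen_fraction(object_height: float, orthographic_size: float) -> float:
    """Fraction of the screen height an object covers under orthographic projection.

    Assumes orthographic_size is half the vertical extent of the view; distance plays no part.
    """
    return object_height / (2.0 * orthographic_size)


for distance in (10.0, 20.0):
    perspective = perspective_screen_fraction(2.0, distance, 60.0)
    orthographic = orthographic_screen_fraction(2.0, 5.0)
    print(f"distance {distance}: perspective {perspective:.3f}, orthographic {orthographic:.3f}")
```

Doubling the distance halves the perspective fraction, while the orthographic fraction stays the same at any distance.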

Render Texture

This will place the camera’s view onto a Texture that can then be applied to another object. This makes it easy to create sports arena video monitors, surveillance cameras, reflections etc.

Target display

A camera has up to 8 target display settings. The camera can be controlled to render to one of up to 8 monitors. This is supported only on PC, Mac and Linux. The Game View shows the display chosen in the Camera Inspector.

- 2018–10–05 Page amended

- Physical Camera options added in Unity 2018.2

- Gate Fit options added in Unity 2018.3