Getting started with AR development in Unity

To get started with AR development, Unity recommends using AR Foundation to create your application for Unity’s supported handheld AR and wearable AR devices.

AR Foundation lets you work with augmented reality platforms through a single multi-platform API within Unity. The package presents an interface for Unity developers to use, but doesn’t implement any AR features on its own.

To use AR Foundation on a target device, you also need to download and install separate packages for each of the target platforms officially supported by Unity:

- ARCore XR Plug-in on Android

- ARKit XR Plug-in on iOS

- Magic Leap XR Plug-in on Magic Leap

- Windows XR Plug-in on HoloLens
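
The platform-to-plug-in pairing in the list above can be captured as a small lookup, for instance in a script that checks a project's package list before a build. This is a minimal sketch in Python, not a Unity API: the function name `required_packages` is hypothetical, and the package identifiers are assumed to match the IDs these packages use in the Unity Package Manager, so verify them against your Unity version.

```python
# Assumed Unity Package Manager identifiers; verify them for your Unity version.
AR_FOUNDATION = "com.unity.xr.arfoundation"

# One provider plug-in per officially supported target platform.
PROVIDER_PLUGINS = {
    "Android": "com.unity.xr.arcore",        # ARCore XR Plug-in
    "iOS": "com.unity.xr.arkit",             # ARKit XR Plug-in
    "Magic Leap": "com.unity.xr.magicleap",  # Magic Leap XR Plug-in
    "HoloLens": "com.unity.xr.windowsmr",    # Windows XR Plug-in
}


def required_packages(platforms):
    """Return AR Foundation plus one provider plug-in per target platform."""
    packages = [AR_FOUNDATION]
    for platform in platforms:
        if platform not in PROVIDER_PLUGINS:
            raise ValueError(f"No AR plug-in listed for platform {platform!r}")
        plugin = PROVIDER_PLUGINS[platform]
        if plugin not in packages:  # skip duplicates if a platform repeats
            packages.append(plugin)
    return packages
```

For example, `required_packages(["Android", "iOS"])` returns AR Foundation followed by the ARCore and ARKit plug-ins, which reflects the point that AR Foundation alone is never enough to run on a device.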

For instructions on how to configure your Project using the XR Plug-in Management system, see the Configuring your Unity Project for XR page.

AR Foundation supports the following features:

| Feature | Description |
|---|---|
| Device tracking | Track the device’s position and orientation in physical space. |
| Raycast | Determine where a ray (defined by an origin and direction) intersects with a real-world feature detected and/or tracked by the AR device. Commonly used to decide where virtual content will appear. Unity has built-in functions that allow you to use raycasting in your AR app. |
| Plane detection | Detect the size and location of horizontal and vertical surfaces (e.g. coffee table, walls). These surfaces are called “planes”. |
| Reference points | Track the positions of planes and feature points over time. |
| Point cloud detection | Detect visually distinct features in the captured camera image and use these points to understand where the device is relative to the world around it. |
| Gestures | Recognize gestures as input events based on human hands. |
| Face tracking | Access face landmarks, a mesh representation of detected faces, and blend shape information, which can feed into a facial animation rig. The Face Manager configures devices for face tracking and creates GameObjects for each detected face. |
| 2D image tracking | Detect specific 2D images in the environment. The Tracked Image Manager automatically creates GameObjects that represent all recognized images. You can change an AR application based on the presence of specific images. |
| 3D object tracking | Import digital representations of real-world objects into your Unity application and detect them in the environment. The Tracked Object Manager creates GameObjects for each detected physical object to enable applications to change based on the presence of specific real-world objects. |
| Environment probes | Detect lighting and color information in specific areas of the environment, which helps enable 3D content to blend seamlessly with the surroundings. The Environment Probe Manager uses this information to automatically create cubemaps in Unity. |
| Meshing | Generate triangle meshes that correspond to the physical space, expanding the ability to interact with representations of the physical environment and/or visually overlay the details on it. |
| 2D and 3D body tracking | Provides 2D (screen-space) or 3D (world-space) representations of humans recognized in the camera frame. For 2D detection, humans are represented by a hierarchy of seventeen joints with screen-space coordinates. For 3D detection, humans are represented by a hierarchy of ninety-three joints with world-space transforms. |
| Human segmentation | The Human Body Subsystem provides apps with human stencil and depth segmentation images. The stencil segmentation image identifies, for each pixel, whether the pixel contains a person. The depth segmentation image consists of an estimated distance from the device for each pixel that correlates to a recognized human. Using these segmentation images together allows for rendered 3D content to be realistically occluded by real-world humans. |
| Occlusion | Apply the distance to objects in the physical world to rendered 3D content, which achieves a realistic blending of physical and virtual objects. |
| Participant tracking | Track the position and orientation of other devices in a shared AR session. |
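
The Raycast row in the table describes a ray, defined by an origin and a direction, being intersected with a detected real-world feature. The geometry behind that idea can be sketched for the simplest case, a ray against a flat plane. This is an illustrative Python sketch, not AR Foundation's implementation or API: the function name `raycast_plane` is hypothetical, and the plane is treated as infinite, whereas a detected AR plane has a bounded extent.

```python
def raycast_plane(origin, direction, plane_point, plane_normal, eps=1e-9):
    """Return the point where a ray hits an infinite plane, or None if it misses.

    All arguments are 3-element sequences (x, y, z). The plane is given by
    any point on it and its normal vector.
    """
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    denom = dot(direction, plane_normal)
    if abs(denom) < eps:
        return None  # ray runs parallel to the plane

    # Solve (origin + t * direction - plane_point) . plane_normal = 0 for t.
    to_plane = tuple(p - o for p, o in zip(plane_point, origin))
    t = dot(to_plane, plane_normal) / denom
    if t < 0:
        return None  # plane lies behind the ray origin

    return tuple(o + t * d for o, d in zip(origin, direction))


# A device held 1.5 units above a floor plane, looking down and forward.
hit = raycast_plane((0, 1.5, 0), (0, -1, 1), (0, 0, 0), (0, 1, 0))
```

The returned point is where virtual content would be placed in this simplified model. In a Unity project, the built-in raycasting functions mentioned in the table do this work against tracked features for you.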

AR platform support

AR Foundation doesn’t implement any AR features on its own. Instead, it defines a multi-platform API that allows developers to work with functionality common to multiple platforms.
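
The split between a shared API and per-platform implementations can be illustrated with a small interface-and-provider sketch. It is written in Python purely to show the pattern. Every class and function name here (`PlaneProvider`, `FakeAndroidProvider`, `FakeIOSProvider`, `count_planes`) is hypothetical and does not correspond to an actual AR Foundation type.

```python
from abc import ABC, abstractmethod


class PlaneProvider(ABC):
    """Hypothetical platform-neutral interface; it implements no detection itself."""

    @abstractmethod
    def detect_planes(self):
        """Return a list of identifiers for the planes the platform has found."""


class FakeAndroidProvider(PlaneProvider):
    """Stand-in for a platform plug-in; returns canned data for illustration."""

    def detect_planes(self):
        return ["floor"]


class FakeIOSProvider(PlaneProvider):
    """A second stand-in plug-in with different canned data."""

    def detect_planes(self):
        return ["floor", "wall"]


def count_planes(provider: PlaneProvider) -> int:
    # Application code depends only on the interface, never on a platform class.
    return len(provider.detect_planes())
```

Application code such as `count_planes` stays the same whichever provider is supplied, which mirrors why AR Foundation needs a platform plug-in installed before any feature works on a device.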

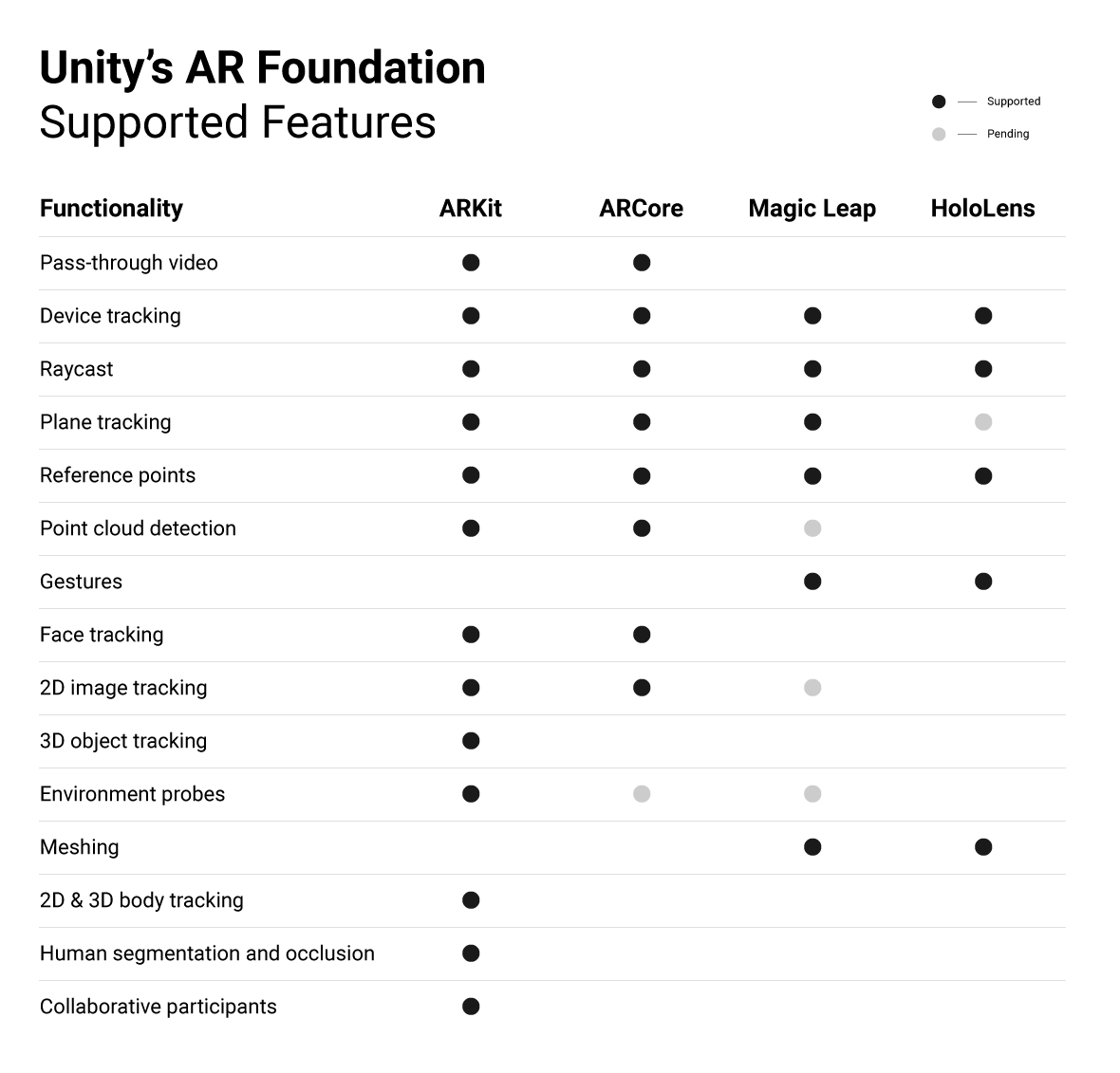

AR Foundation supports the following features across different platforms:

For more information on how to download and use AR Foundation, see the AR Foundation package documentation.